The day I learned what my CPU could do

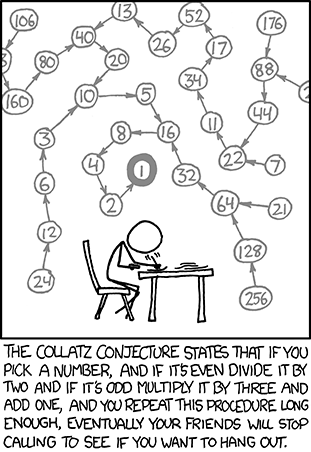

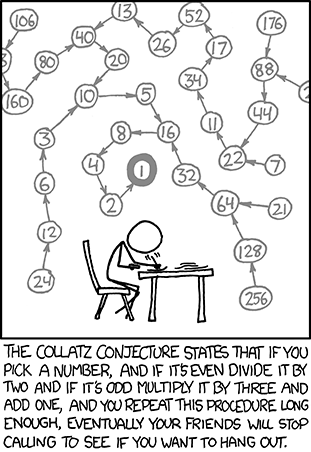

Mar. 5th, 2010 08:38 amToday's xkcd brought back memories.

I first ran across this problem at about the age of 14, in a book called something like Fun With Your Calculator. (Yes, I was one of those 14-year-olds.) There, it was called the Hailstone Number Problem, and was phrased as a challenge. For any number N, which is the number k in the range 1..N that takes the most iterations to reach 1? The book suggested that you try to find the answer for N=1,000.

This was very simple for even a budding programmer like me to test, so I set to work with the only computer I had - an HP-41 programmable calculator. It turns out that you only need to test N/2..N because if any number in the range 1..N/2 had the longest sequence, then starting with a number twice as big (i.e. in the range N.2..N) would have a sequence one step longer. The answer, if I recall correctly, is that k=871 leads to a 178-step sequence to reach the 1-4-2-1 loop.

On the HP-41, the program to test this took 4 hours to run. So I tried it on an Commodore PET in the computer club at school I'd just been allowed to join, where it took about an hour in PET BASIC. An implementation in Pascal on an Apple II took about 40 minutes. We then got a BBC Model B at home, and in BBC Basic the program took 10 minutes to spit out the answer.

At this point I was getting interested in machine code. The Collatz/Hailstone sequence was clearly amenable to simple integer arithmetic. In fact, thinking about it I could see that it could be very efficiently coded. Testing if a number is even is trivial (is the rightmost bit zero?) and so was dividing by two if it was - just execute a right shift of one bit. Multiplying by three and adding one was only slightly harder, since 3X+1 is just X+2X+1, and 2X is just X left-shifted. So save the accumulator to a temporary register, left-shift it, add the contents of the temporary register and add 1.

Now, I was learning to program on a 6502-based machine, so all the arithmetic was 8-bit, meaning that I had to learn how to handle multi-byte binary arithmetic. The problem was also made a bit more complex because my program had an inner loop, that calculated the length of cycle for each value k, and an outer loop that incremented k from N/2 to N and monitored which k had the biggest cycle length. To keep matters simpler, I just coded the inner loop in machine code, using BBC BASIC's inline assembler, and kept the outer loop in BASIC. Even so, I expected quite a speed improvement over my then best time of 10 minutes. I debugged the program, gave it some trivial test inputs to prove that it worked, and then ran it with N=1,000.

"871 takes 178 steps" it spat out, after two seconds.

And that was my first introduction to the performance benefits of programming in raw machine-code, at least in comparison to interpreted high-level languages.

More on Collatz Numbers.

I first ran across this problem at about the age of 14, in a book called something like Fun With Your Calculator. (Yes, I was one of those 14-year-olds.) There, it was called the Hailstone Number Problem, and was phrased as a challenge. For any number N, which is the number k in the range 1..N that takes the most iterations to reach 1? The book suggested that you try to find the answer for N=1,000.

This was very simple for even a budding programmer like me to test, so I set to work with the only computer I had - an HP-41 programmable calculator. It turns out that you only need to test N/2..N because if any number in the range 1..N/2 had the longest sequence, then starting with a number twice as big (i.e. in the range N.2..N) would have a sequence one step longer. The answer, if I recall correctly, is that k=871 leads to a 178-step sequence to reach the 1-4-2-1 loop.

On the HP-41, the program to test this took 4 hours to run. So I tried it on an Commodore PET in the computer club at school I'd just been allowed to join, where it took about an hour in PET BASIC. An implementation in Pascal on an Apple II took about 40 minutes. We then got a BBC Model B at home, and in BBC Basic the program took 10 minutes to spit out the answer.

At this point I was getting interested in machine code. The Collatz/Hailstone sequence was clearly amenable to simple integer arithmetic. In fact, thinking about it I could see that it could be very efficiently coded. Testing if a number is even is trivial (is the rightmost bit zero?) and so was dividing by two if it was - just execute a right shift of one bit. Multiplying by three and adding one was only slightly harder, since 3X+1 is just X+2X+1, and 2X is just X left-shifted. So save the accumulator to a temporary register, left-shift it, add the contents of the temporary register and add 1.

Now, I was learning to program on a 6502-based machine, so all the arithmetic was 8-bit, meaning that I had to learn how to handle multi-byte binary arithmetic. The problem was also made a bit more complex because my program had an inner loop, that calculated the length of cycle for each value k, and an outer loop that incremented k from N/2 to N and monitored which k had the biggest cycle length. To keep matters simpler, I just coded the inner loop in machine code, using BBC BASIC's inline assembler, and kept the outer loop in BASIC. Even so, I expected quite a speed improvement over my then best time of 10 minutes. I debugged the program, gave it some trivial test inputs to prove that it worked, and then ran it with N=1,000.

"871 takes 178 steps" it spat out, after two seconds.

And that was my first introduction to the performance benefits of programming in raw machine-code, at least in comparison to interpreted high-level languages.

More on Collatz Numbers.